Claudio Mayrink Verdun

Harvard University - John A. Paulson School of Engineering and Applied Sciences.

150 Western Ave, Allston, MA 02134

Hi! I am Claudio. Thanks for visiting my website and for your time.

I am a mathematician working with AI and machine learning at Harvard’s School of Engineering and Applied Sciences under the mentorship of Flavio Calmon. My research focuses on building the mathematical foundations of trustworthy AI, developing rigorous frameworks, algorithms, and theoretical guarantees for deploying AI systems safely and equitably. I harness tools from optimization, statistics, information theory, and signal processing to advance both theory and practice. I am currently most excited about inference-time alignment, interpretability, fairness, the science of generative AI evaluations, and the economic implications of AI deployment.

Some snippets of my research:

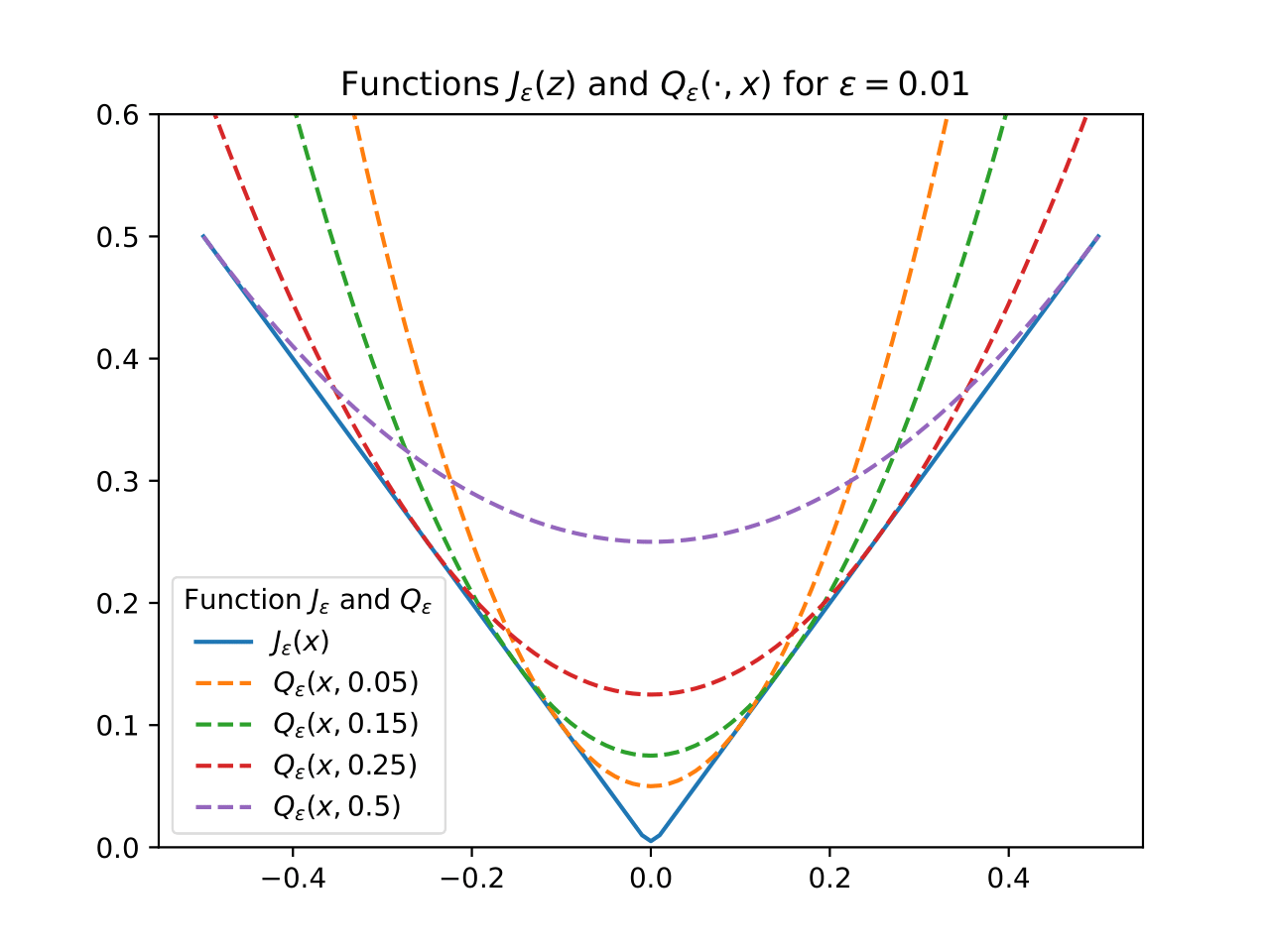

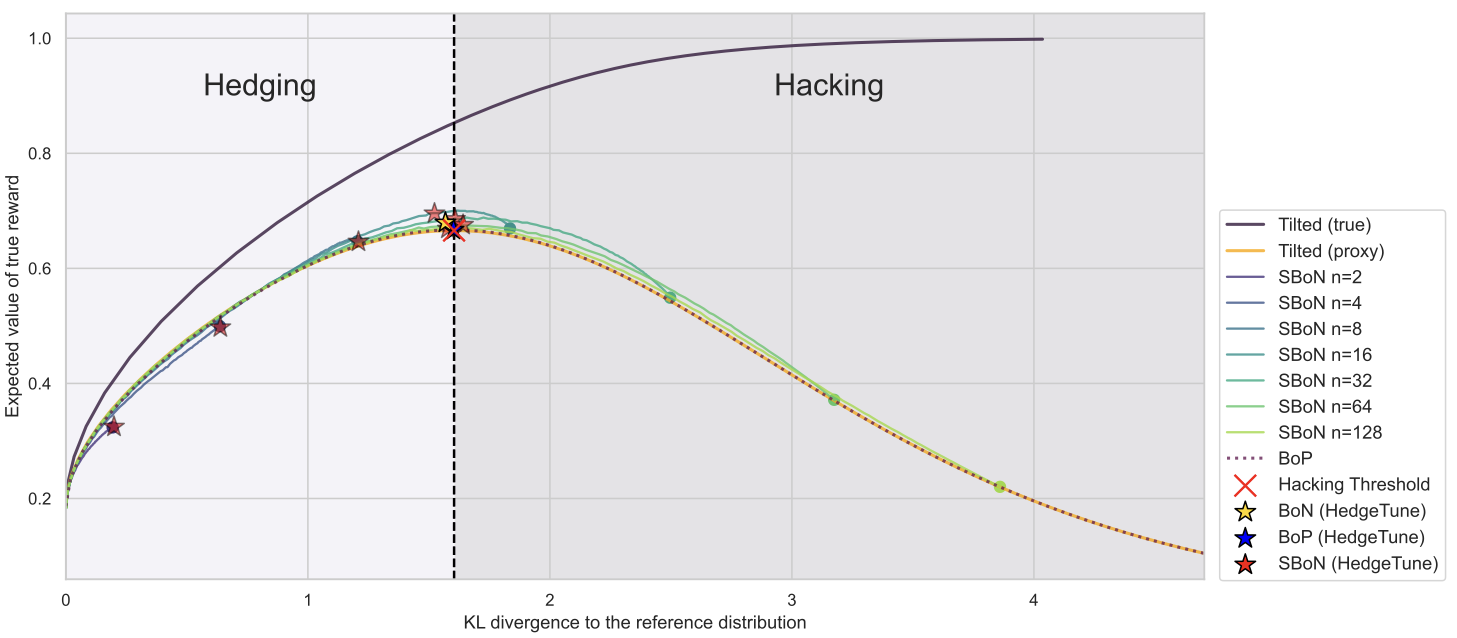

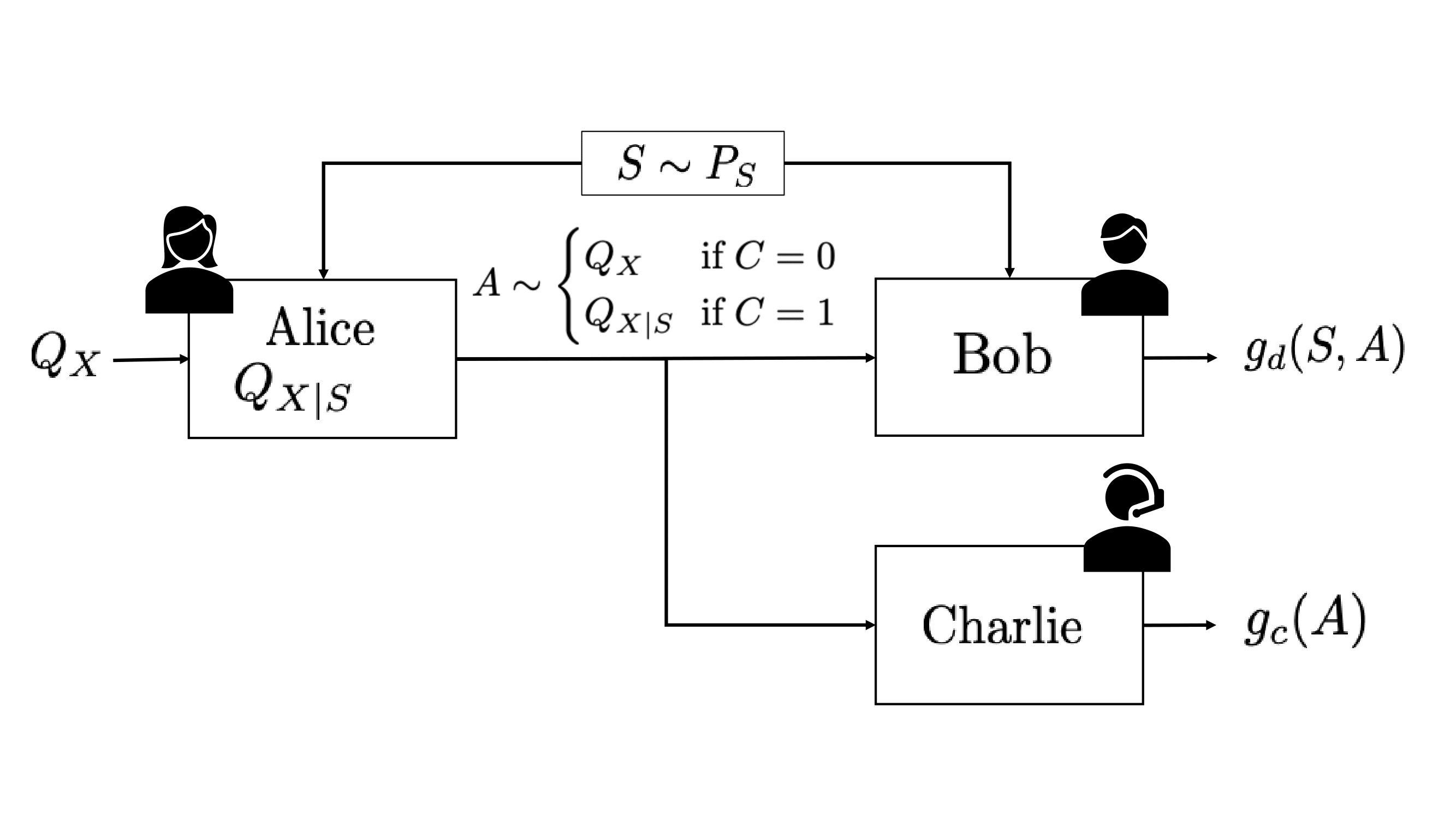

Inference-time alignment and post-training. I develop principled methods for aligning AI systems at inference time, without additional training. This includes introducing Soft Best-of-n sampling and Best-of-Poisson, and establishing theoretical frameworks for reward hacking in inference-time methods.

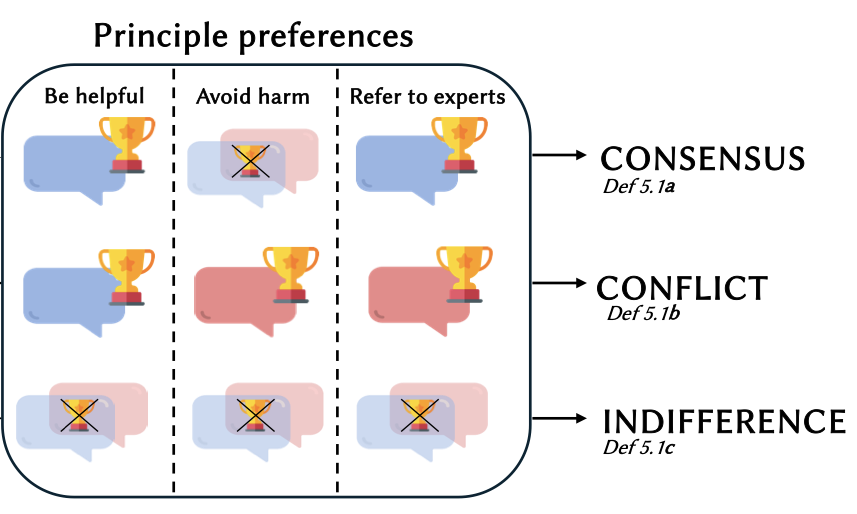

Fairness, accountability, and discretion in AI. I work on rigorous fairness guarantees for generative systems, emphasizing intersectional equity. My research includes developing methods for measuring representation across intersectional groups in retrieval and generative models, devising provably robust watermarking schemes for LLM provenance and accountability, and formalizing how values and principles are interpreted when safety rules conflict or are ambiguous.

Interpretability and sparse representations. I develop techniques to elucidate the inner workings of large models, particularly through sparse autoencoders and parsimonious representations. My work includes p-annealing for training sparse autoencoders and temporal sparse autoencoders that leverage the sequential nature of language for interpretability. I focus on how sparsity can be used to extract interpretable features that capture semantic rather than merely syntactic information.

My past work has focused on developing rigorous algorithms with optimal sample complexity and fast convergence rates for fundamental machine learning and signal processing problems, including sparse regression, matrix completion, and noise-blind problems. I have also developed rigorous uncertainty quantification methods for high-dimensional problems and explored connections between overparameterization and classical optimization. I am equally passionate about applying these techniques to practical domains such as healthcare (particularly medical imaging) and education. Beyond technical research, I actively collaborate with lawyers and policymakers on AI governance, including contributing to policy discussions at the G20 Summit, to help bridge the gap between technical innovation and responsible AI deployment.

I had the privilege of completing my Ph.D. in mathematics and electrical engineering (summa cum laude) at the Technical University of Munich, under the guidance of Felix Krahmer within the Optimization and Data Analysis group, while concurrently affiliated with the Information Theory group led by Holger Boche.

news

| Date | News |
|---|---|
| Feb 7, 2026 | Our new mechanistic interpretability method, Temporal SAEs, was selected as an Oral (top 1.18%) at ICLR 2026! See you in Rio de Janeiro, the cidade maravilhosa! |
| Feb 7, 2026 | GradPCA, our work on out-of-distribution (OOD) detection that exploits the Neural Tangent Kernel, was accepted at ICLR 2026! |
| Sep 26, 2025 | Our state-of-the-art methods for watermarking LLMs, HeavyWater and SimplexWater, were accepted at NeurIPS 2025. See you in San Diego! |
| Sep 26, 2025 | Our paper Inference-Time Reward Hacking in Large Language Models was selected as a spotlight at NeurIPS 2025. |
| Jun 19, 2025 | Our paper Leveraging the Sequential Nature of Language for Interpretability was selected as a spotlight at the ICML 2025 Workshop on Assessing World Models. |
| Jun 1, 2025 | Two new papers on sampling from LLMs and AI alignment: Soft Best-of-n and Inference-Time Reward Hacking in LLMs. |
| Apr 11, 2025 | Our paper on discretion in AI alignment was accepted at ACM FAccT and won the best paper award at the New England NLP (NENLP) workshop! |

selected publications


- Inference-Time Reward Hacking in Large Language Models. In NeurIPS (Spotlight, top 3%), 2025.


- AI Alignment at Your Discretion. In ACM FAccT (and Best Paper Award at New England NLP Symposium), 2025.


- Optimized Couplings for Watermarking Large Language Models. In IEEE International Symposium on Information Theory (ISIT), 2025.
